Utilities Client

Project Overview

Synopsis

On this project, I was the sole UX/UI designer for two products concurrently: a Call Center Analytics Dashboard application and a Form Processing application. I prototyped 104 high-fidelity screens and 45 custom design system components for the two applications, in accordance with brand guidelines. I also served as the bridge between offshore technology partners and local business partners to ensure prioritization of features and quality assurance testing throughout the build.

The successful design and implementation of these 2 products enabled customer service managers to view customer call data in a meaningful way, gaining invaluable insights into customer intent and sentiment. Additionally, it expedited the processing of thousands of paper forms a month and saved significant employee labor hours.

Problem

As a major utilities provider, our client’s customer call center was receiving hundreds of thousands of calls over the course of the year; however, the customer care center and process improvement teams previously had no way to gain insights on how these calls were being handled. They were unable to identify potential issues to troubleshoot in order to elevate the customer experience.

In addition, the client has a low-income energy rate assistance program that requires customers to submit recertification forms through either an online portal or paper mail. Recertifications returned by mail required time-consuming manual review and data entry, and volume had significantly exceeded staff capacity. Staff labor hours were through the roof, and a large backlog of unreviewed forms was starting to build.

My Role

UX/UI Designer

Timeline

4 months

Completed in June 2023

Team

Myself, alongside Product, Development and Consumer Group teams

Tools

Figma, Zeplin, AntDesign, React.js, HTML/CSS, Photoshop, ADO

Call Center Analytics App

Form Processing App

Call Center Analytics Dashboard Mockups (4)

Information redacted to maintain client confidentiality.

1. Dashboard

2. Call List

3. Call Details

4. Keyword Search

Form Processing App Mockups (12)

Information redacted to maintain client confidentiality.

1. Define Forms - Upload New Form

2. Define Forms - Form Uploaded

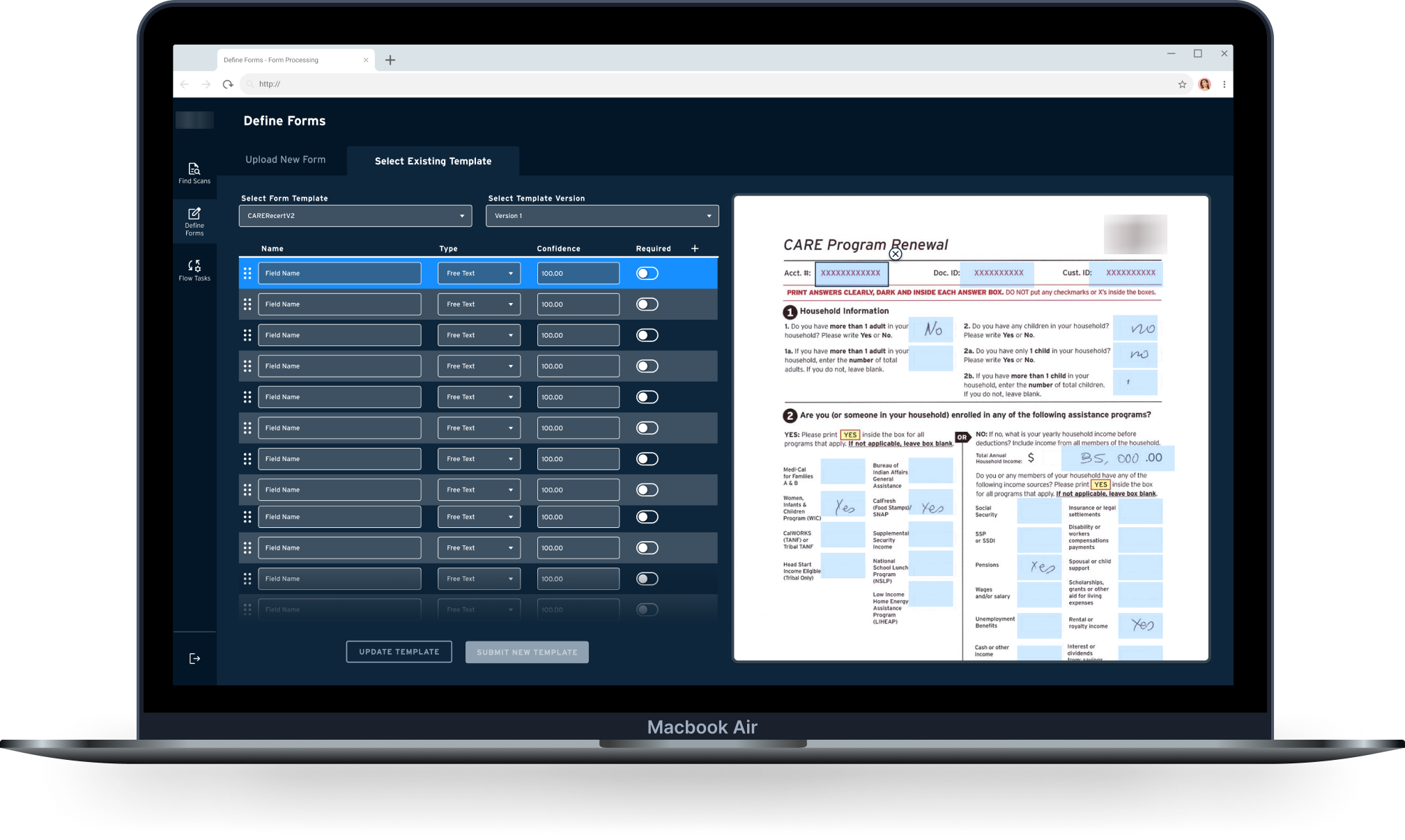

3. Define Forms - Select Existing Template

4. Define Forms - Modify Existing Template

5. Flow Tasks - Add New (Empty)

6. Flow Tasks - Add New (Template Selected)

7. Flow Tasks - Add New (Fields Matched)

8. Flow Tasks - View Task

9. Find Scans - Filter Search

10. Find Scans - Search Results

11. Find Scans - Form Selected (Collapsed)

12. Find Scans - Form Selected (Expanded)

Design Process

Overview of Applications

Proof of Concepts (POC)

2. Call Center Analytics Dashboard

Defining Requirements

Iterative Wireframing

Prototyping

Build & QA

3. Form Processing Application

Updating Requirements

Prototyping & Testing

Build & QA

Creating UI Components

Call Center Analytics

Form Processing

1. Background Information

Overview of Applications

Over the course of this project, I managed design work on two applications simultaneously: the Call Center Analytics Dashboard and the Form Processing Application.

Call Center Analytics Dashboard

The Call Center Analytics application leveraged an intricate Natural Language Assessment (NLA) pipeline to transcribe customer service calls to text and analyze call metadata. Then, using machine learning models, our data scientists classified and categorized the call transcription data, generating relevant 'topic tags' for each call.

I was responsible for designing and managing the implementation of four filterable “dashboard” screens that enabled stakeholders to view this data in a meaningful way, gaining invaluable insights into customer intent and sentiment:

Aggregated Analytics: A filterable, high level overview of key metrics across all calls; includes visualizations of call volume over time, call sentiment broken down by duration, and ‘topic tag’ frequency.

Call List: A filterable listing of all customer service calls, displaying key details at a high level, and allowing users to select a call for further information.

Call Details: When a user selects a call, this screen displays all relevant metadata, a call summary, and a full call transcript with audio. Additionally, data visualizations display the length of each call “segment”, as well as customer and agent sentiment for each line of dialogue.

Keyword Search: This view allows power users to enter key phrases and obtain high-level metrics and analytics for calls containing those phrases.
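To make the Keyword Search behavior concrete, here is a minimal TypeScript sketch of how such a view might aggregate metrics for calls containing a key phrase. The `CallRecord` shape, the sentiment scale, and the `keywordSearch` function are assumptions made for illustration only; they are not taken from the actual build.

```typescript
// Hypothetical shape of a call record; every field name here is an assumption.
interface CallRecord {
  id: string;
  transcript: string;
  durationSeconds: number;
  sentiment: number; // assumed scale: -1 (negative) to 1 (positive)
  topicTags: string[];
}

interface KeywordMetrics {
  matchingCalls: number;
  averageDurationSeconds: number;
  averageSentiment: number;
  tagFrequency: Record<string, number>;
}

// Aggregates metrics over calls whose transcript contains the phrase (case-insensitive).
function keywordSearch(calls: CallRecord[], phrase: string): KeywordMetrics {
  const needle = phrase.trim().toLowerCase();
  const matches = calls.filter(
    (call) => needle.length > 0 && call.transcript.toLowerCase().indexOf(needle) !== -1
  );

  const tagFrequency: Record<string, number> = {};
  let totalDuration = 0;
  let totalSentiment = 0;
  for (const call of matches) {
    totalDuration += call.durationSeconds;
    totalSentiment += call.sentiment;
    for (const tag of call.topicTags) {
      tagFrequency[tag] = (tagFrequency[tag] || 0) + 1;
    }
  }

  const count = matches.length;
  return {
    matchingCalls: count,
    averageDurationSeconds: count === 0 ? 0 : totalDuration / count,
    averageSentiment: count === 0 ? 0 : totalSentiment / count,
    tagFrequency,
  };
}

// Made-up sample data for the sketch.
const sampleCalls: CallRecord[] = [
  { id: "c1", transcript: "My bill is too high this month", durationSeconds: 300, sentiment: -0.5, topicTags: ["Billing"] },
  { id: "c2", transcript: "I need a meter reading", durationSeconds: 120, sentiment: 0.5, topicTags: ["Meter"] },
  { id: "c3", transcript: "The bill shows the wrong meter", durationSeconds: 500, sentiment: -0.5, topicTags: ["Billing", "Meter"] },
];

const billMetrics = keywordSearch(sampleCalls, "bill");
console.log(billMetrics);
```

In this sketch, a plain substring match stands in for whatever search the real backend performed. The tag counts it returns are the kind of data a 'topic tag' frequency chart could be drawn from.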

Form Processing Application

The Form Processing product leveraged Optical Character Recognition (OCR) to read scanned PDFs of paper forms, process the handwritten information, and standardize the data.

I designed and helped implement a custom UI that enabled users to configure batch jobs for processing, search for forms containing errors, and display form data for quick and efficient review. This expedited the processing of thousands of forms a month and saved significant employee labor. The experience consisted of the following screens:

Define Forms: Users may upload and define a new form template, configuring it to a file containing the corresponding forms; or, they may modify a pre-defined sample template.

Flow Tasks: This screen enables users to configure a batch job that will run all the forms through the defined form template and output them to SAP.

Find Scans: Once a batch has been processed, any scans that failed to process appear here. Users can easily find scans, identify errors, and manually review them.

Proof of Concept (POC) Designs

At project inception, I was provided with high level proof of concept designs for 2 screens of each application—exact requirements had yet to be confirmed with consumer group stakeholders and backend teams. As such, it was my responsibility to quickly collaborate with business stakeholders and developers to re-imagine and scale these designs, ensuring they met business objectives and were technically feasible.

Call Center Analytics Dashboard

I was provided with proof of concept designs for 2 screens: Call List and Call Details.

Call Center Analytics - Call List (POC)

Call Center Analytics - Call Details (POC)

Form Processing Application

I was provided with proof of concept designs for 2 screens: Define Forms and Find Scans.

Form Processing Application - Define Forms (POC)

Form Processing Application - Find Scans (POC)

2. Call Center Analytics Dashboard

Defining Requirements

In addition to the POC designs above, I received a list of functional requirements for the Call Details and Call List screens derived from user interviews conducted with stakeholders from the Customer Care Team: the intended users of the Call Center Analytics Dashboard. However, these requirements had yet to be finalized, and they did not address the additional screens needed to complete the user experience.

Audit of POC Interface

To evaluate the prior progress made in meeting the drafted requirements, I conducted an audit of the provided designs. I documented the functional requirements that had been fulfilled, identified those that were unmet, and highlighted potential technical challenges based on my understanding of the backend data being pulled.

Call Details Functional Requirements (Annotations)

Call List Functional Requirements (Annotations)

Call Details Interface (Annotations)

Call List Interface (Annotations)

Affinity Diagram

I then sought out access to the raw user interview data collected by the POC team and conducted my own analysis, organizing the data into an affinity diagram. I created high-level groupings based on similar user responses and ordered the groups chronologically, from the beginning of a call to its end, to develop a full picture of the call experience.

Key Findings

Users would like to understand the intent and outcome of each call

Users would like to understand customer sentiment for each call, at each stage of the call

Users would like to understand how call duration relates to customer sentiment

Users would like to understand which topics are occurring most often in calls

Users would like to view call volume over the course of 12 months

Collaboration on Requirements

Next, I discussed my analysis with the product owner, collaborating closely to modify the functional requirements that were initially provided for the Call Details and Call List screens to incorporate my findings. We also leveraged this analysis to draft a new set of functional requirements for a third screen: the Aggregated Analytics dashboard.

To ensure technical feasibility, we reviewed these revised requirements with our data scientists and backend developers and made additional adjustments accordingly. Finally, we presented the new requirements to our consumer group stakeholders, making further modifications based on their feedback.

Iterative Wireframing

To help consumer group stakeholders clearly visualize the proposed requirements, I created a series of low fidelity wireframes demonstrating potential functionality; we then engaged in multiple discussions regarding the requirements, business objectives, and the overall user experience.

Aggregated Analytics Iterations (Round 1)

I explored various means of visually presenting data to best drive business insights, advocating for the most user-friendly designs throughout the process. By keeping the designs low fidelity at this stage, I was able to iterate quickly and adapt consistently to incoming requirements and shifting needs.

Aggregated Analytics Iterations (Round 2)

Prototyping

After multiple rounds of low fidelity iterations, I obtained buy-in on a design direction, and the user experience was ultimately segmented into four screens: Call List, Call Details, Aggregated Analytics, and Keyword Search. I then stylized the UI by creating all custom UI Components in accordance with branding guidelines, and designed fully functional prototypes to demonstrate interactions to frontend developers and stakeholders.

The flows and microinteractions I designed for each screen showcased the following functionality:

Call List: Search a filterable listing of all customer service calls with key metadata displayed at the highest level

Call Details: Access additional call details for a particular call, including all relevant metadata, a call summary, a full call transcript with audio, the length of each call “segment”, as well as customer and agent sentiment for each line of dialogue.

Aggregated Analytics: Access a filterable overview of key metrics across all calls, including visualizations of call volume over time, call sentiment broken down by call duration, and ‘topic tag’ occurrence frequency.

Keyword Search: Enter key phrases and obtain high-level metrics and analytics for calls containing those phrases.

Post-MVP Screens

In addition to the prototypes I created for the MVP build, I also provided designs for the future state of the product. In particular, I designed a word cloud feature for the Aggregated Analytics dashboard that would enable users to more clearly view ‘topic tag’ occurrences and co-occurrences across calls, driving data insights.

Aggregated Analytics - Word Cloud (No Tags Selected)

Aggregated Analytics - Word Cloud (“Billing” Tag)

Aggregated Analytics - Word Cloud (“Billing” > “Meter” Co-Occurrence)
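As an illustration of the co-occurrence idea behind the word cloud, the following TypeScript sketch counts how often other tags appear on the same calls as a selected tag. The `coOccurrences` function and the sample tags other than "Billing" and "Meter" are hypothetical, and the sketch assumes each tag appears at most once per call.

```typescript
// Counts, for a selected tag, how often each other tag appears on the same call.
// Assumes each call's tag list contains no duplicates.
function coOccurrences(callTags: string[][], selected: string): Record<string, number> {
  const counts: Record<string, number> = {};
  for (const tags of callTags) {
    if (tags.indexOf(selected) === -1) continue; // call does not carry the selected tag
    for (const tag of tags) {
      if (tag === selected) continue;
      counts[tag] = (counts[tag] || 0) + 1;
    }
  }
  return counts;
}

// Made-up tag lists, one array per call.
const taggedCalls: string[][] = [
  ["Billing", "Meter"],
  ["Billing"],
  ["Meter", "Outage"],
  ["Billing", "Meter", "Payment"],
];

const billingCoOccurrences = coOccurrences(taggedCalls, "Billing");
console.log(billingCoOccurrences);
```

Counts like these could drive the relative size of each word once a tag such as “Billing” is selected.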

Demo of Prototypes

You may view a demo video of the prototype or reference the High Fidelity Mockups above for all screens.

Build & QA

Prior to my involvement in the project, frontend development had already begun based on the Call List and Call Details POC Designs displayed above. The UI components were rudimentarily stylized, and the build did not reflect our revised requirements and new designs. Therefore, I collaborated with the frontend team to update the existing build, as well as supported new development of the Aggregated Analytics and Keyword Search screens, which were built in real time as I completed prototypes for the new user experience.

To facilitate this process, I worked with the product manager to create several “mini-sprints” of Enhancement/Build stories. Additionally, we collaborated closely with the QA tester to create Bug Fix stories to address UI functionality issues and error handling.

At this stage of the project, we did not have an active subscription to Zeplin. However, for each story, I provided a link to a Figma frame containing detailed visual annotations. For features that involved particularly complex interactions, I recorded demonstration videos and included links to those, as well.

Build/Enhancement Story - Aggregated Analytics (Ex. 1)

Bug Fix Story - Call Details (Ex. 3)

Build/Enhancement Story - Call List (Ex. 2)

Bug Fix Story - Aggregated Analytics (Ex. 4)

Ultimately, we conducted 7 “mini-sprints” of stories to complete the build and ensure an optimal user experience. Throughout that time, I personally QA tested each of the completed stories and communicated frequently with the frontend development team to quickly rectify issues.

Build/Enhancement & Bug Fix Stories

Build/Enhancement & Bug Fix Stories (2)

3. Form Processing Application

Updating Requirements

Proof of Concept Requirements

Before I joined the project, the POC requirements for the Form Processing Application had been outlined and approved; however, designs had been limited to just two static screens: Define Forms and Find Scans. Therefore, I did not need to explicitly define requirements; rather, it was my responsibility to prototype all the interactions for those two screens, as well as design one additional screen with full interactivity: Flow Tasks.

Collaborating with Consumer Group Stakeholders

The POC requirements were relatively high level, and there were still many scoping and business process intricacies that had yet to be identified and accounted for to make the product truly functional. Due to this, I worked closely with our consumer group stakeholders to discuss business process details and flexibly explore design solutions. I kept detailed logs of each change in order to pass the information along to the product manager for enhancement stories.

Design Change Log (Ex. 1)

Design Change Log (Ex. 2)

Understanding the Flow of Data

Additionally, the POC requirements were defined prior to the completion of the backend infrastructure and needed to be updated accordingly. As I began my designs, I engaged with developers to gain insights into the flow of data on the backend and frontend:

A form template is uploaded through the UI and processed through the optical character recognition (OCR) application to identify response fields. Then, the user configures the form template to a file in the scanning software via the UI.

On the UI, the user then configures a flow task for the form template, uploading an output schema file and matching the schema fields to the Textract response fields to properly format the data for SAP processing.

Next, the user scans a batch of forms to the scanning software file previously configured in the corresponding form template. The flow task automatically runs, and the forms are processed by SAP.

Successfully processed forms go to a designated S3 bucket.

Forms with errors go to a different S3 bucket to be displayed on the UI for review.
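The field-matching and routing steps above can be sketched in TypeScript as follows. This is a simplified illustration, not the actual implementation: the type names, the `runFlowTask` function, the 0-100 confidence scale, and the rule that a missing or low-confidence field sends a form to review are all assumptions.

```typescript
// Hypothetical shapes; none of these names come from the actual system.
interface ExtractedField {
  value: string;
  confidence: number; // assumed 0-100 score reported by the OCR step
}

type ExtractedForm = Record<string, ExtractedField>;

interface FieldMapping {
  schemaField: string;   // field name in the output schema
  responseField: string; // field name in the OCR response
  minConfidence: number; // assumed per-field threshold
}

interface FlowResult {
  destination: "processed" | "review";
  output: Record<string, string>;
  errors: string[];
}

// Applies the schema-to-response mappings to one form and decides where it goes.
function runFlowTask(form: ExtractedForm, mappings: FieldMapping[]): FlowResult {
  const output: Record<string, string> = {};
  const errors: string[] = [];
  for (const mapping of mappings) {
    const field = form[mapping.responseField];
    if (field === undefined) {
      errors.push(`${mapping.schemaField}: missing "${mapping.responseField}"`);
    } else if (field.confidence < mapping.minConfidence) {
      errors.push(`${mapping.schemaField}: low confidence (${field.confidence})`);
    } else {
      output[mapping.schemaField] = field.value;
    }
  }
  return { destination: errors.length === 0 ? "processed" : "review", output, errors };
}

// Made-up mappings and forms for the sketch.
const sampleMappings: FieldMapping[] = [
  { schemaField: "ACCOUNT_NUMBER", responseField: "Account No.", minConfidence: 90 },
  { schemaField: "HOUSEHOLD_SIZE", responseField: "Household Size", minConfidence: 80 },
];

const cleanForm: ExtractedForm = {
  "Account No.": { value: "12345", confidence: 98 },
  "Household Size": { value: "4", confidence: 91 },
};

const smudgedForm: ExtractedForm = {
  "Account No.": { value: "12845", confidence: 62 },
};

const cleanResult = runFlowTask(cleanForm, sampleMappings);
const smudgedResult = runFlowTask(smudgedForm, sampleMappings);
console.log(cleanResult.destination, smudgedResult.destination, smudgedResult.errors);
```

Here the two destinations stand in for the two buckets described above. Carrying an error list alongside the "review" destination is one way the UI could show users what needs manual correction.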

Various requirements updates were necessary to ensure the UI properly accommodated this data flow—in particular for the Flow Tasks screen. I advocated for maintaining a usable, simple interface in all feature implementations, consistently iterating on my designs to ensure maximum usability despite complicated logic.

User Flows

After understanding the flow of data on the backend, it was essential to establish the step-by-step flow for users on the front end. To achieve this, I developed a comprehensive task flow diagram that accounted for all possible interactions. This established a clear visual of the user journey for all stakeholders involved.

Prototyping & Testing

Given the highly process-oriented nature of this application, it was imperative to create detailed prototypes featuring individual frames for each microinteraction on every screen: Define Forms, Flow Tasks, and Find Scans. This helped frontend developers further understand the sequential flow of interactions to complete tasks on the UI, enabling them to code with accuracy.

It’s important to note that frontend development work had already been completed on the majority of UI components prior to my involvement on the project. Since the client did not have a sufficient design system and the POC designs were not stylized, the developers built everything with standard AntDesign components. Unfortunately, we determined there was not enough time for developers to restylize them for MVP; therefore, I designed all of my initial prototypes with unstylized AntDesign components for their reference.

User Flows and Interactions

Define Forms - Upload New Form (Prototypes)

Define Forms - Select Existing Template (Prototypes)

Flow Tasks (Prototypes)

Find Scans (Prototypes)

Post MVP Stylization

Though there wasn't time during the MVP to build out a stylized UI, I nonetheless crafted Custom UI Components and revamped my prototypes accordingly. I systematically redesigned every screen using the new stylized components so that developers could clearly see every prototyped state of the restyled elements.

Define Forms - Upload New Form (Prototypes)

Flow Tasks (Prototypes)

Define Forms - Select Existing Template (Prototypes)

Find Scans (Prototypes)

Guerrilla User Testing

For this particular product, we were fortunate that our consumer group stakeholders were also the intended users of the application. Throughout the process of refining my prototypes and incorporating updates to align with business processes, I had the opportunity to present my designs to our main consumer group stakeholder/user on three distinct occasions.

During these sessions, she engaged with the prototypes to execute the following tasks, offering immediate feedback on any inaccuracies or pain points she encountered:

Upload a new form template and define form fields (draw bounding box, change data format, change confidence level, etc.)

Configure a new flow task and match document fields to Output Schema fields.

Find a scan with errors from a particular date and manually rectify the error.

Additionally, I was able to send the prototypes to her offline for additional feedback. As a result, I was able to incorporate this feedback into my designs, as well as into the build via enhancement stories.

Demo of Prototypes

You may view a demo video of the prototype or reference the High Fidelity Mockups above for all screens.

Build & QA

At project inception, only the UI components had been developed, and there was no product in existence. To address this, I collaborated with the product manager to plan several "mini-sprints" of build stories to develop the three prototyped screens along with their respective microinteractions.

Enhancements and Bug Fixes

Throughout development, I navigated ongoing requests and alterations in scope from our consumer group stakeholders and incorporated user feedback obtained through guerrilla user testing, altering my designs accordingly. In turn, I collaborated with the product manager to translate these changes into enhancement stories for the frontend development team. Working closely with the QA tester, I also helped support Bug Fix stories to rectify UI functionality issues.

At this stage of the project, we did not have an active subscription to Zeplin. However, for each story, I provided a link to a Figma frame containing detailed visual annotations. For features that involved particularly complex interactions, I recorded demonstration videos and included links to those, as well.

Define Forms - Build/Enhancement Story (Ex. 1)

Flow Tasks - Bug Fix Story (Ex. 3)

Find Scans - Build/Enhancement Story (Ex. 2)

Find Scans - Bug Fix Story (Ex. 4)

Ultimately, we conducted 7 “mini-sprints” of stories to complete the build and ensure an optimal user experience. Throughout that time, I personally QA tested each of the completed stories and communicated frequently with the frontend development team to quickly rectify issues.

Build/Enhancement & Bug Fix Stories

Error Handling and Alerts

Given the multitude of interactions involved in the application's design, I also collaborated extensively with frontend developers and QA testers to devise comprehensive error handling for all potential scenarios. I established a centralized repository for all error modals, which facilitated easy reference and development.

4. Design System

Creating UI Components

Mapping Application Design Inspiration

At the beginning of the project, there was no established design system in place for designers and developers to reference. There had been a recent initiative to achieve visual cohesion across multiple cloud products, and only one application had adopted the new visual direction. To address this gap, I collaborated with the designer of that application, collecting a small library of UI components and typography details.

The application’s primary feature was a mapping dashboard and it had limited relation to my two projects; however, I expanded upon the interaction states of its components to suit our products, using both these gathered resources and the application itself as visual inspiration for crafting an expanded library of components for the Call Center Analytics Dashboard and Form Processing Application.

Map Dashboard Application (Prototype)

Pre-Existing Components

Call Center Analytics Dashboard

The initial POC build for the Call Center Analytics Dashboard was stylized to somewhat resemble the look of the mapping application mentioned earlier—at that point, only the UI components visible on the interface in dev had been coded. Consequently, we opted to restyle the existing components to accurately align with the intended design direction showcased in the mapping application as part of MVP and use this new visual direction to complete the remainder of the build.

Designing Components

As I designed custom components for the Call Center Analytics Dashboard, I referenced the client’s branding guidelines and maintained the same visual treatments applied to the components in the mapping application above. I prototyped various React.js-based graph and filtration components, breaking down and documenting each interaction state for developers.

New UI Components

New UI Components (2)

As the developers built out new features, they used these new components, which helped ensure the integrity of the visual direction of the UI. Additionally, I provided the developers with detailed markups of what had previously been built in the dev environment for the Call List and Call Details screens, explaining which components to replace and where to change colors and typography. These updates were addressed through a series of enhancement stories throughout the build.

Call List (Visual Design Markup)

Call Details (Visual Design Markup)

Form Processing Application

Designing Components

As noted, the majority of the UI components for this application were initially developed as unstylized AntDesign components, and the strategic decision was made not to restyle the developed UI for the MVP phase. Nevertheless, I proceeded to redesign and restylize each component, as well as the overall visual direction of the application. This involved referencing the client’s branding guidelines and maintaining visual treatments consistent with the components in the Mapping Application mentioned above.

Ultimately, at the end of the project, I gained access to Zeplin and uploaded these components, as well as the restylized Prototype Screens for developers’ reference. Furthermore, I collaborated with the product manager to provide resources for a backlog of user stories, ensuring developers could effectively complete the restyling efforts post-MVP.

Define Forms (Visual Design Markup)

Flow Tasks (Visual Design Markup)

Find Scans (Visual Design Markup)

Define Forms (New UI Components)

Flow Tasks (New UI Components)

Find Scans (New UI Components)
